After the socialist party upgraded civicrm to version 4.6 a month ago they are experiencing performance issues. In this blog I will round up our quest for performance improvements. But before that some facts about their installation.

- +/- 350.000 contacts

- +/- 300 users who can access civicrm and see members in their local chapter

- +/- 2700 groups

- There are several campaign websites linked to CiviCRM and one of their successfully campaigns leads to 7500 new contacts per week in the system

- Running on a VPS with 4 CPU cores and 8GB of RAM

- around 40 active extensions

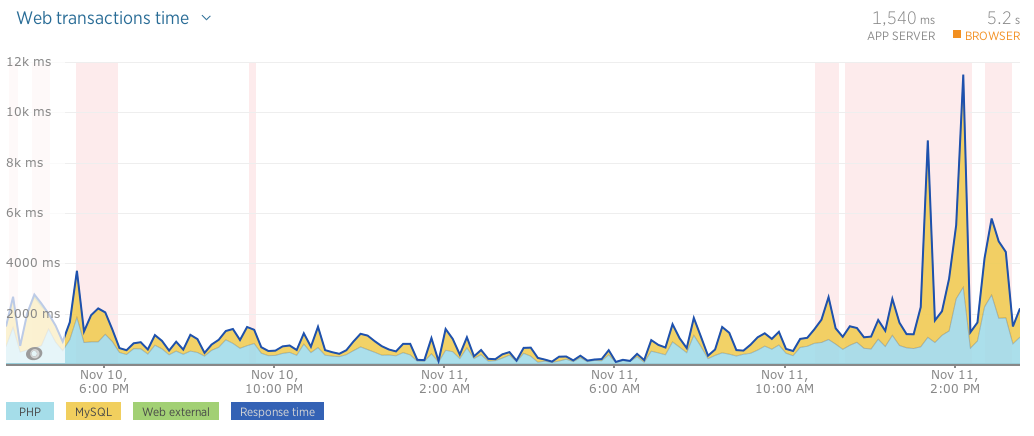

Since yesterday we have added New Relic as a monitoring tool. With New Relic we can monitor and look back in the history. We can also see all the details in each request. So we can analyze the performance.

Above is the screenshot of the monitoring of the last 24 hours. The red/rose squares indicates when the overall performance is poor (more than 2 seconds per request). We also see that MySQL has a big part in the largest peaks.

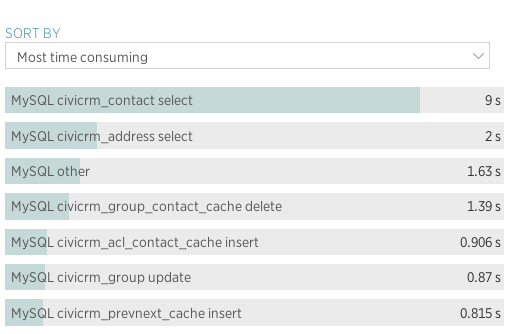

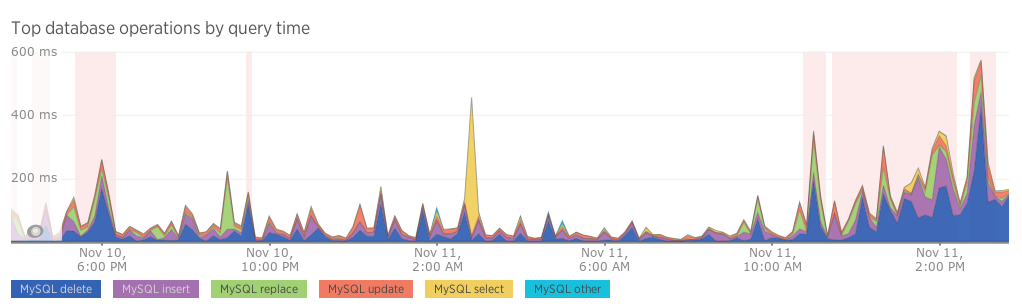

The screenshots above shows the slowest queries and the slowest mysql operations. One observation is that it looks like that the MySQL delete statements are slowing the system down.

It is not clear what exactly those delete statements are or what is causing those to be slow. That is one of the questions to look into next.

Another thing we want to look into is the tuning of the MySQL database configuration and we also want to get familiar with New Relic.

Do you have any thoughts? Or did you do any performance improvements you did? We can use any help and we will keep posted about our quest for performance.

Read more

- https://civicrm.org/blog/jaapjansma/the-quest-for-performance-improvements-2nd-sprint

- https://civicrm.org/blog/jaapjansma/the-quest-for-performance-improvements-3rd-sprint

- https://civicrm.org/blog/jaapjansma/the-quest-for-performance-improvements-4th-sprint

Comments

Cool pictures. Those mysql delete definitely look like your performance culprits, not only because of the graph but also because they are probably preventing a lot of caching and/or triggering cache rebuilds.

There are two things you'd want to do - the first and easy one is to make sure you've got innodb_flush_log_at_trx_commit = 2 in your mysql settings, that'll allow mysql to do things without first flushing everything on each commit.

The second and harder one is to identify the source of the deletions. I'd guess it's coming from the same source as all your new contacts. Turning on your mysql slow log might identify them.

The amount of time spent in the civicrm contact select is also a red flag, I've never seen that on my list. It might suggest a missing index, but more likely some code that's just not using it - often, complex views or smart groups can generate complex sql that can't use indices as you might expect.

What sort of throughput are you seeing in newrelic?

These queries all look pretty familiar

I have seen slow queries on select contact & other tables before - deriving from the stats queries.

I have spent some time of aspects of the delete queries in 4.7 (including the things mentioned here https://civicrm.org/blog/eileen/478-group-contact-cache-deadlocks-improvement) but my current belief is that upgrading to mysql 5.7 and getting mysql locks to work properly with that might be a good win. Mysql locks are a way of stopping two different php processes from competing to do the same thing. The theory is good but mysql < 5.7 has limitations that really block us for using them properly to prevent conflicting rebuilds

My other theory is the big inserts would perform better if we removed the id field from the cache tables. We don't use this field for joining but when inserting only one operation can lock the autoincrement index at once. I think that we might see improvement if, for example the primary key of group_contact_cache were to be contact_id-group_id-status and the id field was removed (I tried this on a dev site and had to make one minor code change - but I have not figured out how to really test the theory.

Note that while Fuzion has sites on 4.6 there are close to 100 patches on Fuzion, including performance patches that are in 4.7 not on 4.6. These do include a number of performance patches so my perspective is excluding those things that have already be solved upstream